Beyond Compute: A New Governance Logic for the AI Value Chain (Part II)

From diffusion controls to standards.

This is the second installment of a two-part essay. You can read the first part here, though this part is written to stand on its own.

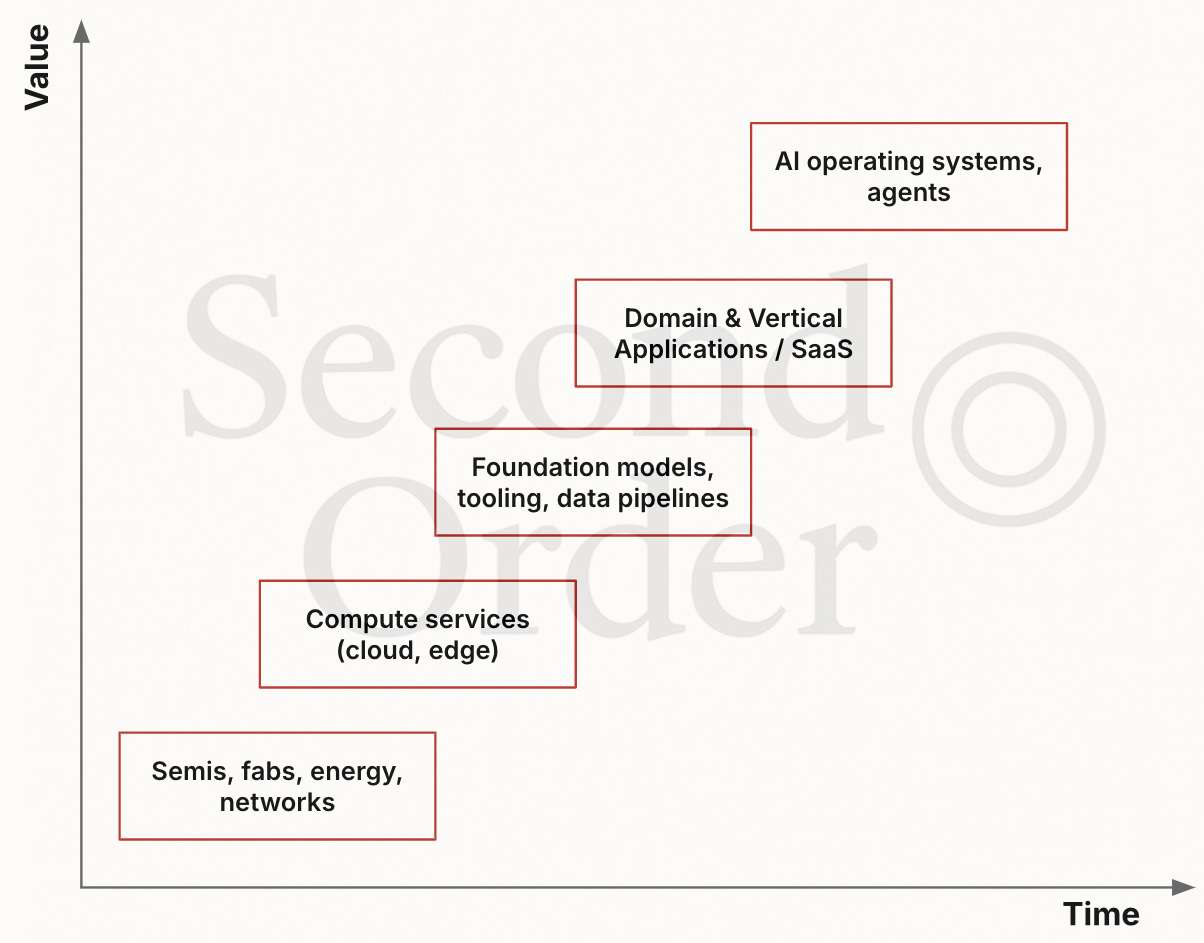

In the first part of this essay, we saw how the commoditization of models and chips weakens compute-based measures to restrict the diffusion of AI technology. Trade controls aiming only at choking compute are likely to miss the crux of future harm and economic value that AI will generate.

Compute will, of course, remain crucial for training frontier models and as a geopolitical flashpoint, but the locus of risk and value is already migrating elsewhere. Consider OpenAI, which recently released its gpt-oss open-weight models under Apache 2.0, explicitly designed to achieve parity with o4-mini and 4o on agentic workflows and chain-of-thought. Each model uses a mixture-of-experts (MoE) architecture that activates only a fraction of its parameters per token at inference, similar to DeepSeek and Kimi K2. Open weights further lower barriers, making distribution and platforms the next moat, more important than marginal model performance gains (gpt-oss showed up across AWS, Azure, and IBM within days of its release).

But as competitive value shifts from models to other segments of the AI value chain, a new governance logic is necessary, since top-down, Cold War-era restrictions on diffusion are increasingly incompatible with AI’s decentralized footprint.

From diffusion control to evaluation science

A testing and evaluation-first approach closes the gap between policy aspirations and engineering realities, and reframes safety as a set of empirical requirements that are observable, repeatable, and interoperable.

Interoperability can help turn safety from a cost center into a revenue accelerator through (1) lower compliance costs, since one battery of tests can satisfy multiple jurisdictions; (2) less bespoke auditing; and (3) new markets for third-party test labs, benchmark publishers, evaluation-as-a-service, and post-deployment monitoring providers, which in turn facilitate enterprise procurement where evaluation attestations are required.
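To make the compliance arithmetic concrete, here is a minimal sketch of the "one battery of tests" idea. Everything in it is an assumption for illustration: the jurisdiction names, the test names, and the helper functions are hypothetical placeholders, not real regulatory requirements.

```python
# Hypothetical requirements: jurisdiction and test names are placeholders,
# not a description of any real regulatory regime.
REQUIREMENTS = {
    "jurisdiction_a": {"cyber_offense_redteam", "bio_misuse_eval", "agentic_autonomy_eval"},
    "jurisdiction_b": {"cyber_offense_redteam", "bio_misuse_eval", "synthetic_media_labeling"},
    "jurisdiction_c": {"bio_misuse_eval", "agentic_autonomy_eval", "synthetic_media_labeling"},
}


def bespoke_test_runs(requirements):
    """Test runs needed if every jurisdiction demands its own separate audit."""
    return sum(len(tests) for tests in requirements.values())


def shared_battery(requirements):
    """Smallest single battery covering every jurisdiction, assuming results are mutually recognized."""
    return set().union(*requirements.values())


def unmet(requirements, attested):
    """For each jurisdiction, list the required tests missing from a set of attestations."""
    return {
        name: sorted(tests - attested)
        for name, tests in requirements.items()
        if tests - attested
    }


battery = shared_battery(REQUIREMENTS)
print("bespoke runs:", bespoke_test_runs(REQUIREMENTS))
print("shared battery size:", len(battery))
print("gaps with full battery:", unmet(REQUIREMENTS, battery))
```

In this toy setup, nine separate test runs collapse into a four-test battery. The saving depends entirely on regulators accepting each other's attestations, which is the hard part.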

The July 2025 White House AI Action Plan partly supports this. The plan prioritizes infrastructure build-out (e.g., chips, data centers) and tasks NIST and CAISI with evaluation initiatives, standards work, and regulatory sandboxes. The rebranding of the U.S. AI Safety Institute to the Center for AI Standards and Innovation (CAISI) signals an emphasis on standards and competitiveness over “safety” branding, which heightens the need for interoperable testing and evaluation standards.

Closing the policy-engineering divide remains an enduring challenge. A stepping stone may be to define a global “minimum viable interoperability” standard and host its requirements in technical annexes that regulators can revise more quickly than statutes (similar to the control lists of the Wassenaar Arrangement, which currently counts 42 participating states, and very much China’s current modus operandi, as we’ll see below).

A better logic…

Unlike the arms race logic, this logic rests on evidence rather than on speculation about end-uses. It steers us away from the notion that compute is a universal risk proxy in an era where smaller, efficient models can create real hazards once packaged into consumer and enterprise products. The critical takeaway here is that the analysis must be performed on the system as deployed, not just the base model, since risks often emerge post-deployment as the model acquires resources and context, and scales.

The most relevant approach to testing and evaluation today (in my view) is that of the U.K. AI Security Institute (formerly the AI Safety Institute), which blends automated tests, red-teaming, human-subject studies, and agent evaluations to probe areas like cyber offense, bio and chemical weapons development, agentic resource acquisition, and societal impacts.

Political content and ideological control aside, some elements of China’s procedural approach to testing and evaluation are compelling and show what an operational regime can look like:

Pre‑release security assessments for high‑impact public deployments, with lighter‑touch filings for low‑risk, internal, or research uses (2023 Interim Measures for Generative AI);

Service‑level registries that publish filed systems and risk attestations so results travel across jurisdictions and reduce duplicate audits (2024 Administrative Measures for Generative Artificial Intelligence Services);

Labeling/watermarking and traceability hooks for synthetic media to support incident response and recalls (2025 Measures for Labeling Artificial Intelligence-Generated Content);

A small set of official benchmarks and leaderboards, run by accredited labs and referenced in technical annexes so that the criteria can evolve quarterly. On the role of these labs, RAND notes:

“These labs blur the line between private- and public-sector AI development in China, with state-backed labs supporting both Chinese government programs and private-sector AI development.”

Testing and evaluation methods globally include automated capability tests, adversarial red-teaming, and hardware-in-the-loop simulations. China excels at operationalizing these through mandatory and recommended national standards (GB, GB/T) and technical documents (TC), which provide a common measurement surface that updates faster than formal law.

… with its set of flaws.

Yet the path to global coordination and interoperability is far from straightforward. High-level principles, such as the G7 AI Principles and Code of Conduct (also referred to as the G7 Hiroshima Process), provide important norms on safety and fairness, but they often stop short of implementation detail and remain voluntary. Moreover, divergences in philosophies on regulating digital technologies among the U.S., E.U., and China result in different risk definitions and thresholds, which in turn translate into incompatible compliance and system design requirements.

Ontologies are the primary sticking point: there is not yet a global scientific consensus on how to measure certain capabilities or how to adjudicate contested concepts such as human-level reasoning. Compute thresholds are one attempt at such a measure but, as mentioned above, they are weak indicators of risk, since risks often emerge post-deployment as the model acquires resources and context. Meaningful thresholds, in turn, depend on precisely defined outcomes and metrics.

The standards landscape is also fragmented. Organizations like ISO, IEEE, ETSI, NIST, and CEN-CENELEC are publishing overlapping standards that emphasize different challenges that testing and evaluation should address. While there have been calls for an “IAEA for AI,” I think an ICAO-like agency for AI could be well-suited to developing a “minimum viable interoperability” requirement for current standards, or a new global standard altogether:

“The ICAO’s activities have included establishing and reviewing international technical standards for aircraft operation and design, crash investigation, the licensing of personnel, telecommunications, meteorology, air navigation equipment, ground facilities for air transport, and search-and-rescue missions. The organization also promotes regional and international agreements aimed at liberalizing aviation markets, helps to establish legal standards to ensure that the growth of aviation does not compromise safety, and encourages the development of other aspects of international aviation law.”

Chasing externalities in the AI product era

Earlier, I wrote that the locus of risk and value is already migrating from raw model capability towards distribution, with frontier model companies already evolving into vertically integrated product and platform companies.

Embedding models into products with massive user bases generates externalities that can’t necessarily be seen in training. They surface only once the product meets users and tools and gains system access, permissions, and distribution.

Now, imagine a single company controlling models, agent frameworks, applications, and third-party integrations. This concentrates risk into a single point of failure, where negative economic, security, and social externalities are harder to mitigate with post-factum regulatory action.

As we saw with social media regulation, an onslaught of regulatory and policy burdens imposed after products have been deployed at scale and sunk their teeth into the global economy is a surefire way of missing the shift, along with the negative externalities that come with products hitting the market under limited legal and regulatory scrutiny. Few anticipated back in 2007 the extent to which Facebook would influence elections, public health, cultural zeitgeist, entertainment, geopolitics, frontier technology development, etc.

A useful analogy is competition law in the United States. The Sherman Act and the Clayton Act were written a century before the Internet and platform economics, and enforcement doctrines struggled to anticipate the rise of aggregator companies built on user-driven network effects. As Lina Khan famously argued in her Yale Law Journal article, “Amazon’s Antitrust Paradox,” current legal tools have missed how new business models can entrench market power while passing conventional competition tests.

The lesson here is that AI governance should pre-emptively follow how moats form: through distribution, workflow integration, and platform control, i.e., where externalities unforeseen during training surface. That requires moving from post-factum regulation to global testing and evaluation, and from model-level to product-oriented assessments that can mitigate risk as the model acquires resources and context, and scales to a massive user base.

Where can we look?

The new focus could build upon a well-established and efficient model found in biotechnology and pharmaceuticals, organized around four pillars: pre‑deployment evaluations; post‑market monitoring; tiered scrutiny based on risk; and a central international body in charge of common ontologies, methodologies, and testing protocols.

In pharmaceuticals, pre‑deployment evaluation follows a multi‑phase testing sequence (Phase 1, 2, 3 trials) to assess safety. Post‑market surveillance monitors adverse events and adapts regulatory decisions. Applying these principles to AI suggests that third‑party testing systems should include:

Pre‑deployment evaluations: similar to clinical trials, involving red‑team testing, human‑subject assessments, and agent‑based evaluations. Models intended for sensitive uses (e.g., drug discovery) would require independent assessment of potential misuses (e.g., synthesising harmful biological agents);

Post‑market monitoring: continuous collection, detection, assessment, monitoring, and prevention of adverse effects of AI systems in the field—similar to pharmacovigilance;

Tiered risks: risk‑based tiers could determine the level of testing, an approach broadly used for gene editing technologies, which, like agentic systems, can self-propagate (an international body could mandate benchmarks for off‑target prediction and require third‑party labs to replicate results for validation);

An international standard and testing body: the International Council for Harmonisation of Technical Requirements for Pharmaceuticals for Human Use (ICH) brings regulators and industry together to set common guidelines on definitions, statistical methodologies, and testing protocols suited for “minimum viable interoperability.”
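As a rough sketch of how tiered scrutiny might be encoded, the snippet below maps a deployed system to a risk tier and then to a set of required evaluations. The tier names, the scoring rule, the one-million-user threshold, and the evaluation lists are all hypothetical choices of mine for illustration; they are not drawn from any existing standard.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    LOW = 1
    ELEVATED = 2
    HIGH = 3


@dataclass(frozen=True)
class Deployment:
    """The system as deployed, not just the base model."""
    name: str
    sensitive_domain: bool  # e.g. drug discovery
    agentic: bool           # can act through tools and permissions
    monthly_users: int


# Hypothetical mapping from tier to required evaluations.
REQUIRED_EVALUATIONS = {
    RiskTier.LOW: ["automated_tests"],
    RiskTier.ELEVATED: ["automated_tests", "red_teaming", "post_market_monitoring"],
    RiskTier.HIGH: [
        "automated_tests",
        "red_teaming",
        "human_subject_studies",
        "agent_evaluations",
        "independent_replication",
        "post_market_monitoring",
    ],
}


def assign_tier(deployment, scale_threshold=1_000_000):
    """Count risk factors present; the threshold is an illustrative assumption."""
    factors = sum([
        deployment.sensitive_domain,
        deployment.agentic,
        deployment.monthly_users >= scale_threshold,
    ])
    if factors >= 2:
        return RiskTier.HIGH
    if factors == 1:
        return RiskTier.ELEVATED
    return RiskTier.LOW


def required_evaluations(deployment):
    """Evaluations a deployment would owe under its assigned tier."""
    return REQUIRED_EVALUATIONS[assign_tier(deployment)]


agent = Deployment("drug-discovery-agent", sensitive_domain=True, agentic=True, monthly_users=2_000)
print(assign_tier(agent).name, required_evaluations(agent))
```

The point of the sketch is that the tier attaches to the deployment (domain, permissions, scale) rather than to training compute, so the same base model can land in different tiers depending on how it is packaged.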

The diffusion of AI risk and value beyond compute requires a shift from hardware-based control to system-level governance. A harmonized, testing and evaluation-first approach may be the most underexplored opportunity for balancing global AI safety and competitiveness. Governance must follow where moats form and externalities emerge: as AI is increasingly packaged into products, risk concentrates in integration, distribution, and scale. Developing interoperable standards is essential not only to manage this evolution, but also to avoid repeating the errors made in regulating digital technology with a technology that is already proliferating faster than any other in history.