Beyond Compute: A New Governance Logic for the AI Value Chain (Part I)

From diffusion controls to standards.

Over the past few weeks, a series of developments has signaled a turning point in global efforts to manage AI proliferation.

The European Union released a voluntary General-Purpose AI Code of Practice to clarify how providers can comply with its AI Act. NVIDIA secured approval to resume shipments of its H20 chips to China, rolling back the export restrictions the Trump administration imposed last April. Moonshot AI launched Kimi K2, a trillion-parameter, open-source model that outperforms GPT-4o and Claude 4 on multiple benchmarks. Thinking Machines Lab raised USD 2 billion at a USD 12 billion valuation from major investors on the promise of multimodal agentic systems and open-source components for researchers and startups.

These events alone point to the current collective action problem around AI diffusion, where Cold War-style, top-down strategic controls are increasingly ineffective against this frontier technology’s global footprint. The nuclear model’s state-controlled, zero-sum, and rivalrous logic is fundamentally ill-suited to AI’s decentralized, multipolar, and iterative development.

Deployment is outpacing governance. The U.S., EU, and China are developing divergent regulatory regimes, standards, and dual-use controls, while multilateral organizations and agreements remain fragmented. Business incentives, meanwhile, are diverging even faster from these governance efforts. In the current AI bubble, companies are under pressure to ship as fast as humanly possible, which makes AI safety a drag on deployment and therefore on revenue.

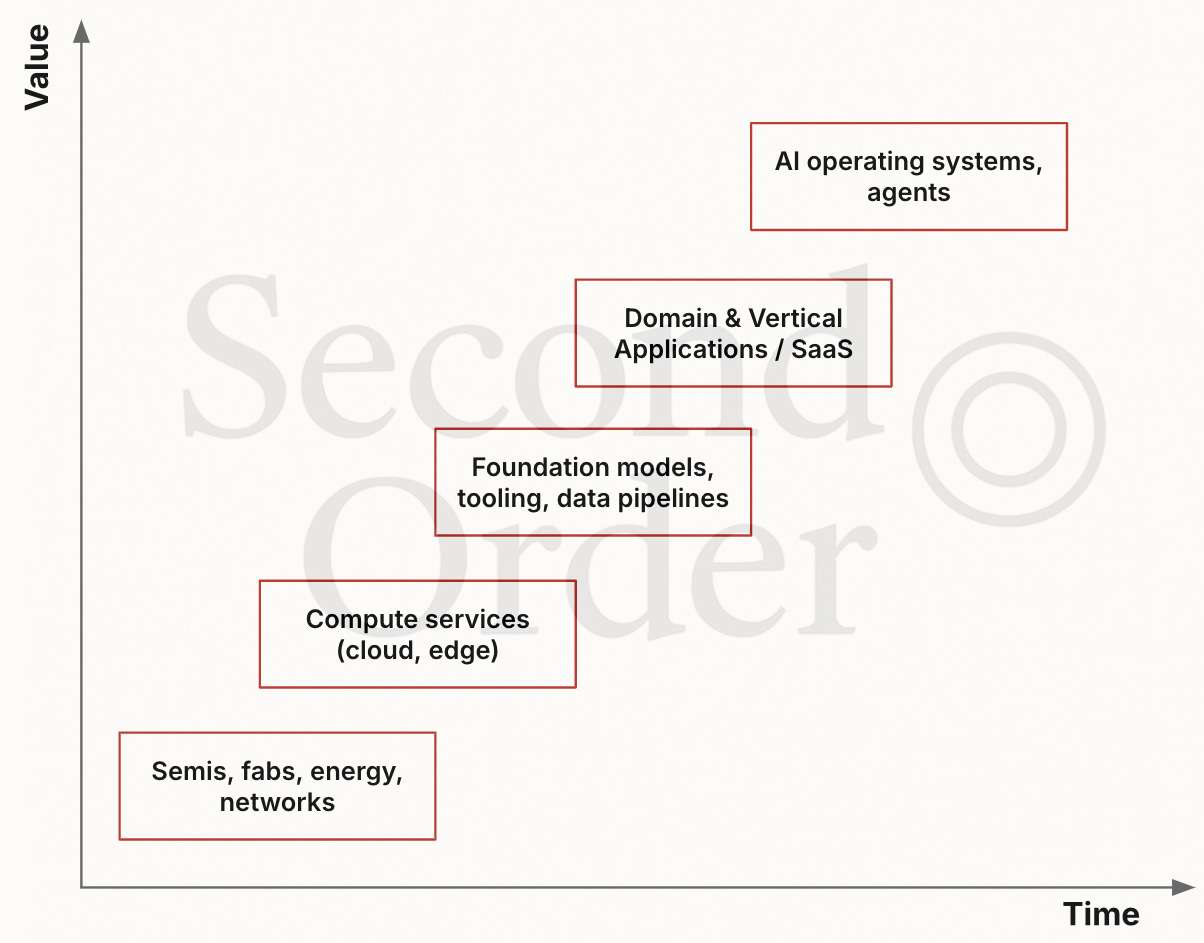

In this essay, I provide an overview of (1) current approaches to restricting AI diffusion; (2) how, as frontier capabilities commoditize, competition and value will shift from compute and power to consumer applications and to companies’ ability to vertically integrate the AI value chain; and (3) how policy must adopt a new governance logic to anticipate this shift, reducing the risk of destabilizing the very sectors AI is meant to transform.

The modern diffusion problem

Historically, transformative technologies have spread through a top-down diffusion path: they were initially controlled (and/or funded) by the military or government, then made available to corporations, and eventually reached consumers. Foundational AI technology completely disrupts this path, landing in the hands of consumers, startups, and non-state actors simultaneously. As Andrej Karpathy put it:

“LLMs display a dramatic reversal of this pattern–they generate disproportionate benefit for regular people, while their impact is a lot more muted and lagging in corporations and governments.”

As we’ll see below, this lag is poised to increase given the current state of strategic technology trade restrictions.

The empirical anchor for this bottom-up reversal is clear. Consider ChatGPT, which has reached 800 million MAUs since its launch in November 2022, faster than any other tech product in history. This rapid adoption is enabled by exceptionally low barriers: consumer, general-purpose LLMs and AI-enabled services are free or cheap, increasingly multilingual, distributed through ubiquitous, global horizontal channels (e.g., smartphones), and require no technical proficiency for general-purpose use.

This accessibility contrasts starkly with previous strategic technologies with controlled diffusion (i.e., technologies under munitions lists, dual-use lists, international agreements, etc.) like nuclear or aerospace capabilities, which demanded specialized knowledge, infrastructure, and massive CAPEX.

Further to this point, AI tools proliferate at nearly zero marginal cost. Model weights and fine-tuning techniques are often openly released and propagate quickly through platforms like GitHub and Hugging Face, making models non-rivalrous and physically difficult to control (more below).

This helps explain why policymakers have focused on compute: it is easier to regulate access to compute and model capability thresholds than to try to bottle up the genie. But that focus gives rise to one of the most salient paradoxes of AI regulation today.

The containment paradox

Since 2022, the U.S. has repeatedly tightened export rules on GPUs, forcing vendors to ship pared-down, China-market variants of flagship chips (e.g., NVIDIA’s A800, H800, and now H20). The rationale is that restricting access to compute will slow an adversary’s ability to train cutting-edge models. Yet halfway through 2025, China’s AI ecosystem has demonstrated resilience and the ability to achieve state-of-the-art performance despite compute chokepoints.

Necessity is the mother of invention. China is filling the gap left by OpenAI, Anthropic, and others in the open-source development space. DeepSeek R1 showed that distilling a large reasoning model into smaller models could yield systems that compete with or outperform GPT-4o and o1-mini on open benchmarks. Kimi K2 relies on a sparse Mixture-of-Experts[1] architecture that activates only 32B of its 1T parameters per token at inference, delivering coding performance on par with GPT-4o, Claude 4, DeepSeek V3, and other foundation models.
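A toy top-k router can illustrate the sparse activation behind Kimi K2’s 32B-of-1T figure. The following is a minimal, hypothetical Python sketch, not Kimi K2’s actual architecture. The expert count, top-k value, vector size, and function names (`top_k_route`, `moe_forward`) are all assumptions chosen for readability. The core idea is that every expert receives a router score, but only the top-k experts actually run for each token. Per-token compute therefore tracks k rather than the model’s total parameter count.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their scores into gate weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    gates = softmax([router_logits[i] for i in chosen])
    return chosen, gates

def moe_forward(x, experts, router_weights, k):
    """Toy sparse MoE layer for a single token vector x.

    Every expert gets a router score, but only the top-k experts are evaluated,
    so compute per token scales with k rather than with len(experts).
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    chosen, gates = top_k_route(logits, k)
    out = [0.0] * len(x)
    for idx, g in zip(chosen, gates):
        y = experts[idx](x)  # only the selected experts run
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, chosen

# Hypothetical toy sizes, not Kimi K2's real configuration.
NUM_EXPERTS, TOP_K, DIM = 16, 2, 4
random.seed(0)
calls = [0] * NUM_EXPERTS

def make_expert(i):
    """Build a toy 'expert' that just rescales its input and records each call."""
    scale = random.uniform(0.5, 1.5)
    def expert(v):
        calls[i] += 1  # count how often this expert is actually evaluated
        return [scale * vi for vi in v]
    return expert

experts = [make_expert(i) for i in range(NUM_EXPERTS)]
router = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [random.gauss(0, 1) for _ in range(DIM)]
output, chosen = moe_forward(token, experts, router, TOP_K)
print("experts evaluated:", sum(calls), "of", NUM_EXPERTS)  # 2 of 16

# Active-parameter share, using the figures cited above for Kimi K2 (32B of 1T).
active_share = 32e9 / 1e12
print(f"active share per token: {active_share:.1%}")  # 3.2%
```

This sketch computes the softmax over only the selected experts, which is one common gating variant. Production MoE models also add load-balancing objectives and shard experts across devices, and the sketch omits both.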

An interesting containment analogy is the so-called Crypto Wars. In the 1990s, the U.S. government regulated encryption hardware and software using keys longer than 40 bits as munitions under the International Traffic in Arms Regulations (ITAR), to prevent “strong” encryption from empowering adversaries (state and non-state alike).[2] The grassroots backlash came at a time when email, e-commerce, and file-sharing were booming. It led to the open release of Pretty Good Privacy (PGP), which became a de facto standard and made strong encryption widely accessible and easily reproducible. Academic institutions simultaneously accelerated the development of more efficient cryptographic algorithms, rapidly moving beyond the 40-bit export restriction.
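A quick keyspace comparison shows why the 40-bit ceiling was a real handicap. The comparison assumes an attacker who, in the worst case, must try every possible key (exhaustive search). Each additional bit then doubles the work:

```latex
N_{40} = 2^{40} \approx 1.1 \times 10^{12}, \qquad
N_{128} = 2^{128} \approx 3.4 \times 10^{38}, \qquad
\frac{N_{128}}{N_{40}} = 2^{88} \approx 3.1 \times 10^{26}
```

A 128-bit key therefore offers roughly $10^{26}$ times as many possibilities as an exportable 40-bit key.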

The same forces are at play here, but at a faster pace due to the iterative and decentralized nature of the open-source AI developer ecosystem. Hugging Face, for example, currently hosts over one million pre-trained models, many of them ready to deploy with minor fine-tuning and minimal compute. This ease of use, speed of improvement, and low barrier to adoption can weaken export control mechanisms such as end-user certificates, licenses, and deemed-export rules.[3]

Virality fuels proliferation

In this AI wave (bubble, in my opinion), consumer-first apps dominate. June 2025 internal data from Andreessen Horowitz showed that the median consumer AI startup reaches USD 4.2 million ARR in its first 12 months, more than double the traditional USD 2 million median benchmark for enterprise startups. This is due in part to the commoditization of frontier capabilities. Companies that train or fine-tune their own models see revenue spike whenever a new release drops, which makes fast iteration critical to securing funding.

“Given the rapid growth both AI-native B2B and B2C companies are achieving between Seed and Series A, startups looking to raise venture capital need a strong velocity story. If not yet in live commercial traction, then certainly in shipping speed and product iteration. Speed is becoming a moat.”

Consumer AI apps monetize fast and durably because they inhabit the most valuable real estate in tech: user home screens and everyday personal and professional workflows. Fast iteration is the path of least resistance to virality.

As Mary Meeker signals in her (now seminal) report, distribution (illustrated in blocks 4 and 5 above) will surpass model quality in moat-building. Satellite networks are extending Internet access to the 2.6 billion people still offline, and AI-native mobile interfaces (e.g., ChatGPT, Claude) may become their point of entry. Many of their first touchpoints may be agent-driven interfaces that understand local language, context, and intent.

OpenAI understands that owning the end-user relationship is a strategic necessity. From its H1 2025 strategy:

“To introduce an AI super assistant to the world, we need the right policy environment. This work is critical to the product because the cards are stacked against us; we're competing with powerful incumbents who will leverage their distribution to advantage their own products.”

Sam Altman lays out plans to grow ChatGPT into a super assistant “that knows and cares about its user” and “can help with any task that a smart, trustworthy, and emotionally intelligent person with a computer could do.”

As frontier capabilities commoditize and value shifts toward distribution, AI systems now reach consumers long before governments can produce sensible regulation, frameworks, or guidance, and well before compliance teams can complete risk assessments or submit export license applications.

The collective action problem intensifies as economic growth is increasingly driven by consumer demand for the latest AI products and services. Once proliferation is fueled by that demand rather than by compute capabilities, no regulatory regime can restrict diffusion without incurring some degree of self-inflicted economic cost (Jamie Dimon might agree).

Both new market entrants and incumbents are incentivized to ship faster, scale wider, and consolidate market share more aggressively. Safety mechanisms like red-teaming or interpretability will increasingly be seen as pure cost centers.

Policy that fails to anticipate this shift in where value accrues may cede control over emerging risks altogether. I believe global coordination on testing and evaluation standards may be our best bet to mitigate systemic risks, enable the safe deployment of AI in critical infrastructure, and secure long-term social value, trust, and economic stability.

In the next part of this essay, I make the case for evaluation-first governance. I explain why we need interoperable standards that can travel across jurisdictions and what evaluation models are emerging in the UK, U.S., EU, and China. I also argue that testing must extend to the product layer as risk and value move into deployed systems.

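[1] Mixture of Experts (MoE) is an architecture in which a learned router sends each token to a small subset of specialized sub-networks (“experts”), so only a fraction of the model’s parameters is active at a time. This lets models be pretrained with far less compute than a dense model of the same size. For a given compute budget, the model or dataset can be scaled up, reaching quality comparable to a larger dense model faster.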

[2] Even at the time, 40-bit encryption was weak; 128-bit was a global standard.

[3] This is not to say that export controls lack teeth. Frontier training still requires a cluster. Even if smaller, distilled models can close capability gaps, pushing compute-intensive frontiers (e.g., multimodal agents with context windows in the tens of millions of tokens) still depends on hardware.